Let's get one thing straight: skipping website usability testing is no longer a neutral choice. It puts you at a real competitive disadvantage. Usability testing means watching real people use your site to find out where they get stuck, so you can build a smoother experience that lifts revenue and keeps customers coming back.

Why Usability Testing Gives You a Real Edge

In a market this crowded, just having a functional website is table stakes. The real win comes from the user experience you provide. So many businesses fall into the trap of thinking their site is intuitive just because their own team can click through it without a problem. Of course they can—they live and breathe the product every day.

That’s a dangerously expensive assumption.

Real insight happens when you watch a first-time user get completely lost trying to find your pricing, ditch a checkout form because it's too confusing, or scroll right past a critical call-to-action. These aren't just minor hiccups; they are gaping holes in your revenue funnel. Every single point of friction makes it more likely a potential customer will just leave and go to a competitor.

Moving Beyond Guesswork to Hard Data

Website usability testing is how you swap out those internal assumptions for undeniable behavioral data. It's the difference between thinking you know what your users want and knowing what they actually do when they land on your page. This evidence-based approach is how you uncover customer pain points that are actively costing you money.

The impact can be huge. One commonly cited figure is that proper usability testing can drive a 135% improvement in key performance metrics. And yet, only about 55% of companies reportedly do any kind of UX testing at all. That gap is a massive opportunity for anyone willing to actually listen to their users. You can dig into more of these UX statistics and trends to see just how wide that gap is.

The Real-World Business Impact

When you commit to regular website usability testing, you’re not just tweaking a website; you're building a more resilient, customer-focused business. The insights you gather fuel a cycle of continuous improvement that adds up over time, creating a serious competitive advantage that's hard to replicate.

Investing in usability isn't an expense. It's a strategic investment in customer retention and brand loyalty. It’s the most direct path to understanding and removing the barriers standing between your user and a conversion.

This process has a direct and measurable effect on the metrics that matter most to your business. Making your site easier to navigate isn't just a "nice-to-have"—it delivers tangible results.

We've seen firsthand how a few targeted improvements, identified through testing, can transform core metrics. The table below illustrates the kind of impact you can expect.

Key Metrics Transformed by Usability Testing

Ultimately, this shows that simply making things easier for your users can have a powerful ripple effect across the entire business, strengthening your bottom line.

Market leaders don't just build products; they build experiences. They get that a seamless user journey is a core driver of growth, turning usability testing from a simple checklist item into a fundamental pillar of their success strategy.

Right, let's get into what happens before you ever sit down with a user. The most crucial work in any website usability testing project happens before you hit 'record'. The prep phase is what separates a test that gives you vague, "nice-to-know" feedback from one that delivers a clear, actionable roadmap for improvement.

Diving in without a plan is like starting a road trip without a map—sure, you're moving, but you're probably not going to end up anywhere useful. This is where we turn fuzzy ideas into a structured, measurable project.

Defining Your Test Objectives

First things first: you need to move past a goal like "see if the site is easy to use." That’s far too broad. We need sharp, specific objectives that are directly tied to business outcomes. This clarity will focus your entire effort and make sure the data you collect is actually powerful.

A strong objective is measurable and gives you a "why" for every task you'll eventually ask a user to do. Think less about general usability and more about the specific user behaviors you want to see.

For instance, instead of a fuzzy goal, try one of these:

- For cart abandonment: Can a new user successfully complete a purchase in under three minutes without needing to use the help chat?

- For feature adoption: Can an existing customer find and grasp the value of our new project management dashboard in less than five minutes?

- For lead generation: Can first-time visitors locate and fill out our "Request a Demo" form with a task completion rate of 90% or higher?

See the difference? These goals give your usability test a clear purpose and make it much, much easier to measure the impact of any changes you make down the line.

Developing Realistic User Personas

Okay, so your objectives are locked in. Now, who are you testing with? This is where user personas come in. A user persona isn't just a generic description; it's a fictional character profile that represents your ideal customer, built from real user data and solid market research.

A well-crafted persona is your north star for recruitment. It makes sure you're testing with people whose real-life behaviors and motivations mirror your target audience. This is how you avoid collecting beautiful but ultimately useless feedback from the wrong users.

Your persona should dig into the key details that influence how someone would actually use your site.

Creating a few of these distinct personas helps ensure your test participants truly represent the different segments of your customer base.

Writing Effective Screening Questions

With clear objectives and personas in hand, the final piece of the puzzle is writing your screening questions. Think of this as your filter—it weeds out the unqualified participants and helps you zero in on the exact users you need. Good screeners are the unsung heroes of successful usability testing.

The goal here is to ask questions that reveal behaviors and experiences without tipping your hand. You have to avoid leading questions that make it obvious what the "right" answer is.

For example, a weak screener might be: "Are you a project manager who needs better software?" (This is way too leading; almost everyone will just say yes.)

A strong screener is more nuanced: "Which of the following best describes your role in managing projects at your company?"

- I am the primary decision-maker for software purchases.

- I use project management tools daily but don't make purchasing decisions.

- I rarely use project management software.

- None of the above.

This kind of question qualifies people based on their actual experience. By carefully laying all this groundwork, you're setting the stage for a usability test that delivers focused, actionable insights instead of a bunch of random noise.
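If you collect screener responses in a spreadsheet or form tool, the filtering step can be sketched in a few lines. This is a minimal illustration, not a feature of any recruiting platform: the persona names and the `match_persona` helper are hypothetical, and the answer text is taken from the example question above.

```python
from typing import Optional

# Hypothetical mapping from screener answers to the persona they qualify for.
# Answers not listed here ("I rarely use..." and "None of the above.")
# screen the respondent out.
QUALIFYING_ANSWERS = {
    "I am the primary decision-maker for software purchases.": "decision_maker",
    "I use project management tools daily but don't make purchasing decisions.": "daily_user",
}


def match_persona(answer: str) -> Optional[str]:
    """Return the persona a respondent qualifies for, or None if screened out."""
    return QUALIFYING_ANSWERS.get(answer.strip())


responses = {
    "respondent_a": "I am the primary decision-maker for software purchases.",
    "respondent_b": "I rarely use project management software.",
    "respondent_c": "None of the above.",
}

# Keep only respondents who map to a persona.
qualified = {
    name: persona
    for name, answer in responses.items()
    if (persona := match_persona(answer)) is not None
}
print(qualified)
```

Because the respondent only ever sees neutral answer options, the qualifying logic stays on your side of the form, which is exactly what keeps the question from being leading.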

Crafting Realistic Test Scenarios and Tasks

The quality of your website usability testing comes down to the quality of your tasks. This is where the real magic happens. It’s the difference between watching someone follow a paint-by-numbers instruction list and seeing their genuine, unfiltered problem-solving journey.

Simplistic commands like "Find the pricing page" or "Click the contact button" only tell you if your labels are obvious. They don't reveal how users actually think or behave when they have a real-world goal. To get insights that matter, you need to design scenarios that give users a purpose, not just a directive.

This means you have to shift from giving direct instructions to creating goal-oriented situations. You're building a realistic context that mirrors what truly motivates a user.

The Power of Goal-Oriented Scenarios

A strong scenario sets the stage and gives the user a problem to solve. It provides just enough context to make them feel motivated, encouraging them to use your site as a tool to hit their objective. This is how you uncover the "why" behind their clicks, not just the "what."

Just think about the difference in the feedback you’d get from these two prompts:

- Weak Prompt: "Add the blue t-shirt to your cart."

- Strong Scenario: "You're looking for a gift for a friend who loves hiking. Find a suitable t-shirt, check if it's available in their size (medium), and add it to your cart."

The second prompt is so much more powerful. It forces the user to navigate, filter, and make decisions just as a real shopper would. This is how you discover if your category pages are intuitive or if your size filters are a frustrating mess.

The best test tasks feel less like a test and more like a real-life situation. Your goal is to observe a natural user journey, not to guide them down a predetermined path. You want to see where they go, not where you tell them to go.

Crafting Scenarios That Elicit Natural Behavior

Building these scenarios is an art. You need to provide enough detail for the goal to be clear, but not so much that you're leading the witness. The key is to always focus on the user's end goal, not the specific steps you think they should take.

When you're developing your test protocol, it's a great idea to have a good list of open-ended questions examples on hand. These help you craft not just the initial scenario but also the follow-up questions that probe deeper into a user's thought process.

Here are a few more examples comparing weak tasks to strong, goal-driven scenarios that you can adapt for your own tests.

Scenario Comparison

Notice how the strong scenarios create a narrative? They give the user a reason to care about completing the task. This leads to far more authentic interactions and, ultimately, much more valuable insights for you and your team. This is a true cornerstone of effective website usability testing.

How to Recruit the Right Participants for Your Test

The insights you get from website usability testing are only as good as the people you test with. It’s that simple. Recruiting participants who genuinely mirror your target audience isn't just a "nice-to-have"—it's completely non-negotiable.

Think about it: if you sell high-end project management software to enterprise clients, what good is feedback from college students? At best, it's useless. At worst, it's dangerously misleading and could send your team down a development rabbit hole that leads nowhere.

The entire value of your test hinges on finding people whose real-life goals and struggles align with the user personas you've already built. This is the only way to ensure the problems you uncover are the ones actually hurting your business.

Where to Find Your Testers

So, where do you find these perfect participants? You have a few options, each with its own trade-offs. The right choice really comes down to your budget, your timeline, and just how niche your audience is.

- Specialized Recruiting Platforms: Services like UserTesting, UserZoom, or Lyssna have massive panels of pre-screened testers ready to go. This is often the fastest route, especially if your demographic is fairly common. They handle all the logistics and payments, which saves a ton of administrative headaches.

- Your Existing Customer Base: Sometimes the best source is right under your nose. An email blast to your mailing list or a small pop-up on your site can be incredibly effective. These are real people who already know your brand, making their feedback highly relevant. Just be aware that their familiarity can make them "expert" users, so they might breeze past issues a first-time visitor would stumble on.

- Social Media and Community Forums: Channels like LinkedIn, focused Facebook groups, or specific subreddits can be absolute goldmines for finding niche audiences. If you’re building a tool for photographers, posting in a popular photography subreddit connects you directly with passionate, qualified people. This route takes more manual effort, but the quality of participants can be outstanding.

Balancing Cost and Quality

Platforms are quick but can get expensive. Using your own audience is cheap but might bring some bias. Social media is a solid middle ground that demands more of your time but offers fantastic targeting if you're willing to do the legwork.

The goal isn't just to find people—it's to find the right people. A small group of five perfectly matched participants will give you more actionable data than fifty people who don't fit your user profile. Quality always trumps quantity in recruitment.

For instance, if you're testing a new Shopify app for DTC fashion brands, paying a service to find verified Shopify store owners is probably a fantastic investment. Their feedback will be incredibly specific and valuable. On the other hand, if you're just testing a general-interest blog, a broad callout on social media is likely more than enough and far more cost-effective.

Determining Your Sample Size

A common myth that stops teams dead in their tracks is the idea that they need a massive, statistically significant sample size for usability testing. This "analysis paralysis" often prevents them from doing any testing at all.

But here’s the good news: for qualitative usability testing, you need way fewer people than you think.

Widely cited research by Jakob Nielsen and Tom Landauer, popularized by the Nielsen Norman Group, showed that testing with just five users is enough to uncover roughly 85% of the usability problems on a website. This principle makes website usability testing incredibly efficient and accessible, even for small teams on a tight budget. You can dig deeper into these powerful usability testing findings to see the data for yourself.

The logic is that you'll spot the same major roadblocks over and over again with the first few users. Sure, adding more participants will uncover some minor, edge-case issues, but the return on investment drops off fast. For most projects, aiming for 5-8 participants per user persona is the sweet spot. It gives you rich, qualitative data without breaking the bank or taking weeks to schedule.
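If you're curious where the "five users, roughly 85%" figure comes from, it follows from a simple discovery model: if each participant exposes a given problem with probability L, then n participants expose it with probability 1 - (1 - L)^n. The sketch below assumes L = 0.31, the average reported in Nielsen's research. Your own site's rate may well differ, so treat the output as a rule of thumb rather than a guarantee.

```python
def share_of_problems_found(n_users: int, per_user_rate: float = 0.31) -> float:
    """Expected share of usability problems seen at least once by n_users.

    per_user_rate is the chance that a single participant runs into a given
    problem. The 0.31 default is an assumed average, not a measured value
    for your site.
    """
    return 1 - (1 - per_user_rate) ** n_users


# Show how quickly the returns diminish as participants are added.
for n in (1, 3, 5, 8, 15):
    print(f"{n:>2} users -> {share_of_problems_found(n):.0%} of problems found")
```

With the default rate, five users land at about 84%, which is the number usually rounded to 85%. Most of the remaining gain arrives by the eighth participant, which is why the 5-8 range works so well.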

Turning Test Data into Actionable Insights

Finishing your test sessions feels great, but the raw data you've gathered is just potential. The real value of website usability testing is unlocked when you translate those messy notes, user quotes, and screen recordings into a clear, prioritized action plan. This is where you transform observations into concrete improvements.

The goal isn't just to list problems. It's to build a compelling story, backed by evidence, that shows stakeholders exactly what needs to be fixed and why. A powerful analysis marries the emotional gut-punch of qualitative feedback with the hard logic of quantitative data.

Synthesizing Qualitative and Quantitative Data

Your first move is to consolidate everything. Start by re-watching your session recordings and going through your notes. I always look for recurring themes and pull out direct quotes that perfectly capture a user's frustration or "aha!" moment. A quote like, "I have no idea what this button does, I'm scared to click it," is far more powerful than simply noting "user hesitated."

While you're soaking in the qualitative feedback, you also need to tally your quantitative metrics. These are your hard numbers:

- Task Success Rate: What percentage of users actually managed to complete each task?

- Time on Task: How long did it take users to finish a task, on average? This can reveal hidden friction.

- Error Rate: How many users made a mistake, like clicking the wrong link first or going down a dead-end path?

Combining these two data types creates a complete, undeniable picture. For example, you might find that while 80% of users eventually found the return policy, it took them an average of two painful minutes, and your notes are full of quotes expressing frustration. That's a clear signal that the journey is broken, even if the destination is technically reachable.
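Tallying these numbers doesn't need special software. Here's a minimal sketch of how the three metrics could be computed from your session notes. The `TaskAttempt` record and the sample data are hypothetical, made up to mirror the return-policy example above, and averaging time only over successful attempts is one common convention rather than the only valid one.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List, Optional


@dataclass
class TaskAttempt:
    participant: str
    completed: bool    # did the user finish the task?
    seconds: float     # time spent on the task
    made_error: bool   # e.g. clicked the wrong link first or hit a dead end


def summarize(attempts: List[TaskAttempt]) -> dict:
    """Compute task success rate, average time on task, and error rate."""
    total = len(attempts)
    completed = [a for a in attempts if a.completed]
    avg_seconds: Optional[float] = (
        mean(a.seconds for a in completed) if completed else None
    )
    return {
        "success_rate": len(completed) / total,
        "avg_seconds_on_task": avg_seconds,  # successful attempts only
        "error_rate": sum(a.made_error for a in attempts) / total,
    }


# Hypothetical results for the task "find the return policy".
attempts = [
    TaskAttempt("P1", True, 110.0, True),
    TaskAttempt("P2", True, 130.0, False),
    TaskAttempt("P3", True, 95.0, True),
    TaskAttempt("P4", True, 145.0, True),
    TaskAttempt("P5", False, 180.0, False),
]

summary = summarize(attempts)
print(summary)
```

Run this per task, not per session, so a slow or error-prone task stands out instead of being averaged away.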

The core of analysis is pattern recognition. A single user struggling might be an outlier, but when three out of five users get stuck in the exact same spot, you've found a significant usability issue that's likely costing you conversions.

This infographic lays out the core flow of the testing process, from the initial setup to the feedback that fuels your entire analysis.

As you can see, gathering feedback is the final step of the test itself, but it's the critical first step in turning that data into meaningful change.

How to Prioritize Your Findings

Once you have a list of identified issues, you'll quickly realize you can't fix everything at once. This is where prioritization becomes your best friend. Without a system, teams often gravitate toward the easiest fixes or the loudest complaints, which are rarely the most impactful changes.

A simple but incredibly effective method I've used for years is a prioritization matrix. It’s a straightforward way to categorize each issue based on how many users it affected (frequency) and how badly it messed up their experience (severity).

This framework removes the guesswork and helps you focus your development resources where they'll make the biggest difference for your users and your business.

Usability Issue Prioritization Matrix

An issue that is high-frequency and high-severity—like a broken "Add to Cart" button that blocked every single user—is an all-hands-on-deck, top-priority fix. On the flip side, a low-frequency, low-severity issue, like a minor typo one user spotted on an FAQ page, can be addressed when you have spare capacity.
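To make the matrix concrete, here's a rough sketch of how issues could be sorted into its four cells. The 1-3 severity scale, the 50% frequency cutoff, and the labels for the two middle cells are all assumptions for illustration. Only the two corner cases (fix immediately, fix when you have spare capacity) come from the description above.

```python
from dataclasses import dataclass


@dataclass
class Issue:
    description: str
    users_affected: int
    severity: int  # assumed scale: 1 = cosmetic, 2 = slows the user, 3 = blocks the task


def priority(issue: Issue, total_users: int, high_frequency_share: float = 0.5) -> str:
    """Place an issue in a frequency x severity matrix."""
    high_frequency = issue.users_affected / total_users >= high_frequency_share
    high_severity = issue.severity >= 3
    if high_frequency and high_severity:
        return "fix now"
    if high_severity:
        return "fix soon"      # rare but blocking (assumed label)
    if high_frequency:
        return "schedule"      # common but minor (assumed label)
    return "backlog"           # address when there is spare capacity


issues = [
    Issue("Typo on FAQ page", users_affected=1, severity=1),
    Issue("Broken 'Add to Cart' button", users_affected=5, severity=3),
]

# List the most urgent issues first.
order = ["fix now", "fix soon", "schedule", "backlog"]
for issue in sorted(issues, key=lambda i: order.index(priority(i, total_users=5))):
    print(f"{priority(issue, total_users=5):>8}: {issue.description}")
```

Tune the cutoffs to your own test size. With five participants, "half or more" means three users, which matches the pattern-recognition rule of thumb from earlier.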

For a deeper dive into the complete process, from planning to execution, check out our comprehensive guide to usability testing for websites.

Creating a Compelling Report

Your final step is to package your findings into a report that actually inspires action. Forget about creating a dry, academic document that no one will read. Your report should be visual, concise, and laser-focused on solutions.

I've found the most effective reports tell a story. Start with a punchy executive summary of the key findings, then dive into the details of each major issue. For each problem you present, follow this simple structure:

- The Finding: State the problem in one clear, concise sentence. (e.g., "Users could not find shipping cost information before starting the checkout process.")

- The Evidence: Back it up with both your data and a powerful user quote. (e.g., "4 out of 5 users abandoned the task. One said, 'I'm not giving you my email just to find out shipping is $20.'")

- The Recommendation: Provide a specific, actionable suggestion for a fix. (e.g., "Display estimated shipping costs directly on the product page using a zip code estimator.")

This structure makes it incredibly easy for designers, developers, and product managers to understand the problem, see the undeniable proof, and know exactly what to do next. This is how your website usability testing efforts translate directly into a better user experience and a stronger bottom line.

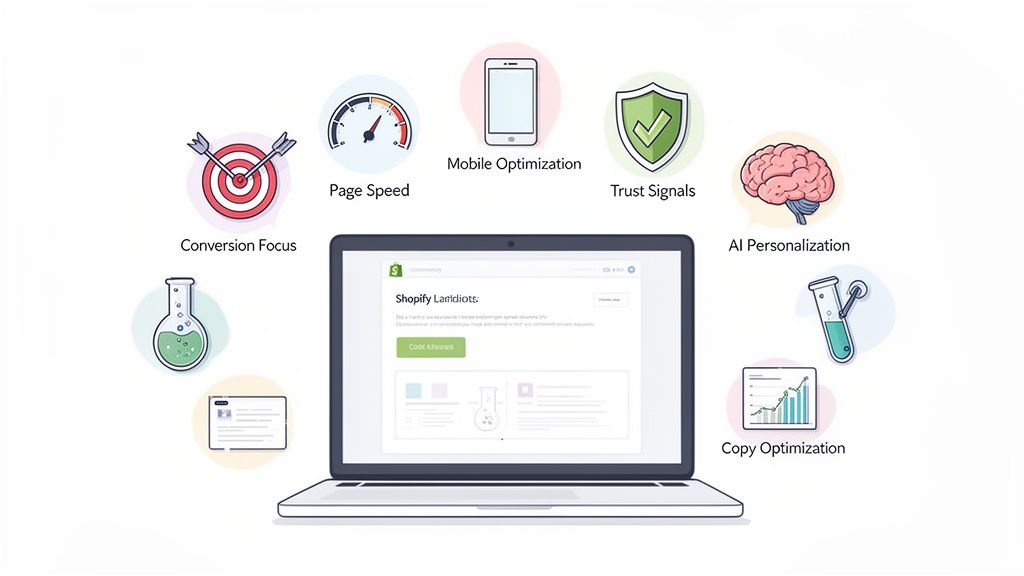

Tools That Make Usability Testing Easier

Look, you can run a usability test with just a person and a laptop. I’ve done it. But if you want to move faster and get deeper insights, the right software is a game-changer. The modern toolkit for website usability testing goes way beyond basic screen sharing, offering powerful platforms that handle everything from finding participants to analyzing the results.

Picking the right tool really boils down to your specific goals, budget, and the kind of testing you're doing. Are you planning in-depth, moderated interviews? Or do you need quick, unmoderated feedback on a design? The answer dictates the tool.

Different platforms are built for different jobs. Some are masters of unmoderated testing, while others pack a serious punch with their analytics features.

Platforms for Moderated and Unmoderated Testing

Your core tool will likely be a platform designed specifically for running the tests. These are the workhorses that manage the logistics of giving users tasks and recording what happens next.

- UserTesting: A true giant in the industry. Its main draw is access to a massive, incredibly diverse panel of participants. It’s fantastic for both moderated and unmoderated tests and is my go-to when I need to find very specific user demographics on a tight deadline.

- Maze: Known for being fast and integrating beautifully with design tools like Figma, Maze is a powerhouse for unmoderated testing of prototypes and live websites. It spits out quantitative data like bounce rates and misclick rates right alongside user comments, making it a favorite for product and design teams.

- Lyssna (formerly UsabilityHub): This one is all about getting quick, focused feedback. It offers specialized tests like five-second impressions and first-click analysis, which are perfect for getting a gut check on design concepts before you invest heavy development resources.

Tools for Session Replay and Heatmaps

Sometimes the most valuable insights come from just watching people use your site naturally, without any specific instructions. This is where session recording and heatmap tools are worth their weight in gold. They show you exactly where real users click, scroll, and get stuck.

A usability test shows you how users perform tasks you give them. A session recording shows you what users choose to do on their own. Combining both gives you a complete, 360-degree view of your user experience.

Tools like Hotjar or Crazy Egg are the leaders here. They create visual heatmaps, giving you a "hot-or-cold" picture of where your users are clicking most. I’ve spent countless hours watching session replays—it's like looking over a user’s shoulder, revealing friction points you would never have thought to test for. A good screen recording software comparison can help you pinpoint the best option for what you’re trying to achieve.

Honestly, the best strategy is often a hybrid one. You might use UserTesting for those deep-dive moderated interviews, Maze for validating prototypes with quick feedback, and Hotjar to see how it all holds up in the real world. This multi-tool approach ensures you're collecting rich, diverse data at every step, which is the secret to building a truly user-friendly website.

Common Questions About Website Usability Testing

When teams first dip their toes into website usability testing, a few practical questions almost always come up. It's completely normal. Let’s tackle some of the most common ones to clear up any confusion before you dive in.

Cost is a big one. It's easy to assume usability testing will break the bank, especially when you hear about big-budget moderated tests with fancy recruiting agencies. But you don’t have to start there. Unmoderated testing platforms offer surprisingly affordable plans, and you can always recruit from your own email list or social channels for next to nothing. The investment really just scales with your needs.

Another common holdup is timing. When is the right time to test? The best answer is always early and often. Honestly, testing a rough wireframe or a simple prototype is far cheaper and easier than overhauling flawed code after you've already launched. You can—and should—test at any stage, from the back-of-a-napkin concept to a mature, live website.

How Many Users Do I Really Need?

This is the question I hear most often, and the answer usually surprises people. You really don't need a massive sample size to get powerful qualitative feedback. It's a common misconception.

As industry research famously shows, testing with just five users can uncover about 85% of a website's core usability problems. The goal here isn't to achieve statistical perfection; it's to identify recurring friction points and patterns that are tripping people up.

Don't let the hunt for a huge participant pool become a barrier. The insights you'll get from a handful of well-chosen users are incredibly valuable and will give your team more than enough to work on.

To get a better sense of the different ways you can approach this, it's worth exploring the various user experience testing methods available. Each one is suited for different project stages and budgets.
