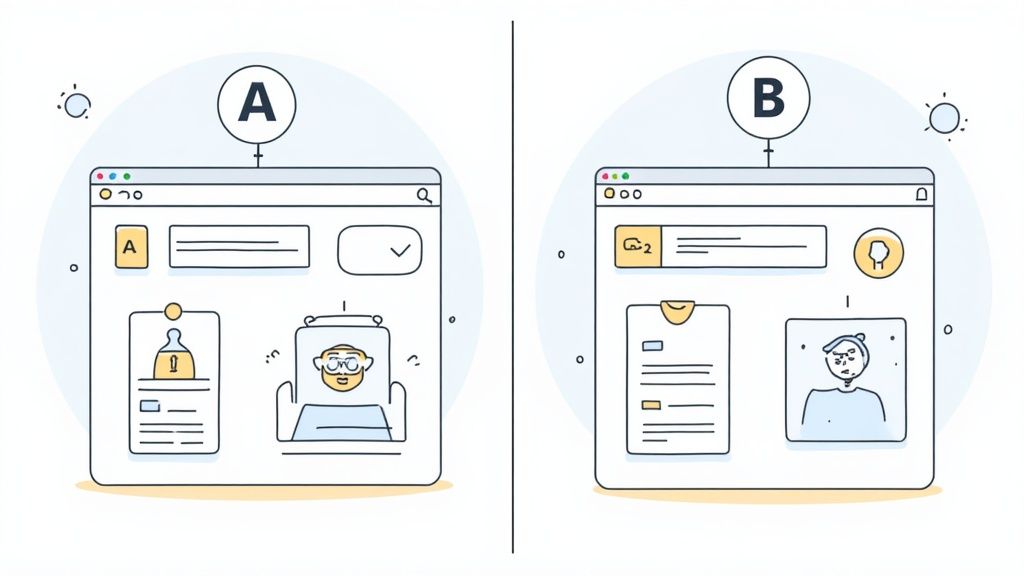

So, you want to get into A/B testing on your Shopify store? Smart move. At its core, it's just a straightforward way to compare two versions of a webpage to see which one makes you more money. It’s how you stop guessing and start making data-driven decisions that actually move the needle on your conversion rates and revenue.

Building a Foundation for Smarter Shopify Tests

Jumping into experiments without a solid plan is the fastest way to waste time and get muddled results. Before you even think about which A/B testing tool to install, the very first thing you need to do is set clear, measurable goals. This way, success isn't just a gut feeling; it's a number you can actually track.

Your goal is to shift from "I think this will work" to "I have evidence this might work." This foundational work ensures every test you run has a real purpose. And remember, A/B testing isn't an island; for the best results, it needs to be part of your larger conversion rate optimization best practices.

Pinpoint High-Impact Opportunities

Think of your analytics as a treasure map. It shows you exactly where to start digging. Dive into your Shopify Analytics and Google Analytics to find those pages that get tons of traffic but just aren't performing. These are your low-hanging fruit—your prime candidates for testing.

Keep an eye out for patterns like these:

- High Bounce Rates: Pages where visitors land and then leave almost immediately.

- Low Add-to-Cart Rates: Product pages that get plenty of eyeballs but few clicks on the "Add to Cart" button.

- Significant Drop-offs: Specific steps in your checkout process or sales funnel where you're losing a chunk of potential customers.

These problem areas are where small, targeted changes can deliver the biggest wins. If you want a refresher on the basics, we've got a whole guide that breaks it all down: https://www.ecorn.agency/blog/what-is-a-b-testing
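To make that hunt concrete, here is a minimal Python sketch that flags high-traffic, low-performing pages from an analytics export. The `PageStats` fields, the sample numbers, and every threshold are hypothetical placeholders, not values from Shopify or Google Analytics, so tune them to your own store.

```python
from dataclasses import dataclass


@dataclass
class PageStats:
    path: str
    sessions: int
    bounces: int        # sessions that left without a second page view
    add_to_carts: int


def find_test_candidates(pages, min_sessions=1000, max_atc_rate=0.05, min_bounce_rate=0.60):
    """Return high-traffic pages that underperform, busiest first.

    All thresholds are illustrative assumptions, not industry standards.
    """
    flagged = []
    for p in pages:
        if p.sessions < min_sessions:
            continue  # too little traffic to be worth testing yet
        bounce_rate = p.bounces / p.sessions
        atc_rate = p.add_to_carts / p.sessions
        if bounce_rate >= min_bounce_rate or atc_rate <= max_atc_rate:
            flagged.append((p.path, p.sessions, round(bounce_rate, 3), round(atc_rate, 3)))
    return sorted(flagged, key=lambda row: row[1], reverse=True)


# Made-up numbers for illustration only.
pages = [
    PageStats("/products/blue-widget", 8200, 3100, 240),
    PageStats("/collections/sale", 5400, 3900, 410),
    PageStats("/products/red-widget", 4700, 1500, 520),
    PageStats("/pages/about", 300, 250, 0),
]

for path, sessions, bounce_rate, atc_rate in find_test_candidates(pages):
    print(path, sessions, bounce_rate, atc_rate)
```

Sorting by sessions puts the busiest problem pages first, which is usually where a test reaches a trustworthy sample size soonest.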

Understand the Numbers Game

For any A/B test to be worth your time, you need to get your head around two key concepts: statistical significance and traffic volume. Statistical significance is a measure of how unlikely your results would be if your change had no real effect. Most tools shoot for a 95% confidence level, which means a difference this large would show up through random chance alone less than 5% of the time.

I see this all the time: a store owner stops a test after two days because they see a huge 20% lift. That early spike is exciting, but it's often just statistical noise. You have to let the test run long enough for the data to mature before you can trust the outcome.

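This brings us to traffic. You simply can't get reliable results without enough visitors. For instance, a store with a 3% conversion rate trying to detect a tiny 5% uplift would need a staggering 1.2 million visitors for each version. Even for a more noticeable 20% uplift, you'd still need around 74,000 visitors per variant. The exact figures shift with the confidence level and statistical power you plan for, but the pattern holds: the smaller the lift you want to detect, the more traffic you need.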
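If you want to run this kind of estimate yourself, here is a rough Python sketch using a common textbook approximation for comparing two conversion rates. The function name and the defaults (95% confidence, 80% power, two-sided test) are assumptions for illustration. Your testing tool may use a different method and return different numbers.

```python
from math import ceil
from statistics import NormalDist


def visitors_per_variant(baseline_rate, relative_uplift, confidence=0.95, power=0.80):
    """Approximate visitors needed per variant for a two-sided two-proportion test.

    baseline_rate: current conversion rate, e.g. 0.03 for 3%
    relative_uplift: smallest lift worth detecting, e.g. 0.20 for +20%
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_uplift)
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # significance threshold
    z_beta = NormalDist().inv_cdf(power)                      # chance of catching a real effect
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


small_lift = visitors_per_variant(0.03, 0.05)  # 3% baseline, +5% relative lift
large_lift = visitors_per_variant(0.03, 0.20)  # 3% baseline, +20% relative lift
print(small_lift, large_lift)
```

Under these default assumptions, the 5% case comes out in the low hundreds of thousands of visitors per variant, and the 20% case at well under twenty thousand. Other calculators and stricter settings return larger numbers, so treat any single figure as an estimate tied to its assumptions. What stays constant is the shape of the curve: detecting a 5% lift takes more than ten times the traffic of a 20% lift.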

This is why having enough traffic is non-negotiable. Putting the data first ensures your tests are driven by real evidence, setting you up for wins that truly count.

Crafting a Powerful Data-Driven Hypothesis

Every great A/B test I've ever run started with a strong, data-backed hypothesis—not just a random guess. Think of it as your educated prediction about what will happen when you make a change, and more importantly, why it will happen. Without a solid hypothesis, you're just throwing things at the wall, hoping something sticks.

A good hypothesis gives your experiment a clear purpose. It turns random tweaks into a structured learning process. We want to move beyond simple ideas like "let's make the button green" and start running strategic experiments that genuinely solve user problems.

The best way I’ve found to frame this is with the If-Then-Because model. It’s a simple format that forces you to connect a specific change to a measurable result, all backed by clear reasoning.

- If we implement this specific change…

- Then we expect this measurable outcome…

- Because of this reason rooted in data or user behavior.

A well-formed hypothesis is your experiment's north star. It clearly defines what you’re changing, what you expect to happen, and the logic behind it. This ensures every test generates valuable insights, whether it "wins" or "loses."

From Observation to Hypothesis

So, where do these brilliant ideas come from? The most powerful hypotheses are born from digging into data and observing real user behavior. You need to put on your detective hat and hunt for clues that reveal friction points in your customer's journey.

Your Shopify analytics are a decent starting point, but you have to go deeper. The real magic happens when you combine that quantitative data with qualitative insights to understand the "why" behind the numbers.

Here are a few of my go-to sources for inspiration:

- Heatmaps and Scroll Maps: These tools are fantastic for seeing where users are clicking (or not clicking) and how far they actually scroll. You might be surprised to find they're completely missing your key call-to-action.

- Session Recordings: I can't recommend this enough. Watch anonymous recordings of real people using your site. You might spot them rage-clicking a broken link or getting stuck trying to find a piece of information. It’s often painful to watch but incredibly insightful.

- Customer Surveys and Feedback: Just ask! Your customers will often tell you exactly what they find confusing or frustrating. Their words are a goldmine for test ideas.

Let’s say you’ve been watching session recordings and notice a lot of users hovering over the "Add to Cart" button but hesitating to click. That’s an observation that can spark a great hypothesis.

Example Hypothesis:

If we change the "Add to Cart" button text to a more direct "Buy It Now," then we will increase the add-to-cart rate because the new copy creates a clearer, more urgent call to action, reducing hesitation.
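If you keep a backlog of test ideas, it can help to store each hypothesis in the same three-part shape. Here is one possible way to do that in Python. The `Hypothesis` class and its field names are made up for this illustration and are not part of any testing tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hypothesis:
    change: str   # the "If we ..." part
    outcome: str  # the "then we ..." part
    reason: str   # the "because ..." part
    metric: str   # the single number that decides the test

    def statement(self) -> str:
        return f"If we {self.change}, then we {self.outcome} because {self.reason}."


cta_copy = Hypothesis(
    change='change the "Add to Cart" button text to a more direct "Buy It Now"',
    outcome="will increase the add-to-cart rate",
    reason="the new copy creates a clearer, more urgent call to action, reducing hesitation",
    metric="add_to_cart_rate",
)

print(cta_copy.statement())
```

Forcing every idea through the same fields makes it obvious when one is missing its "because" or has no metric attached.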

This whole process, from gathering data to analyzing the results, generally takes about 2 to 4 weeks. For a deeper dive into how experts structure the entire workflow, from hypothesis to analysis, check out this guide on A/B testing on Shopify at Speedboostr.com. Following a proven structure like this is what turns your testing program into a true growth engine for your store.

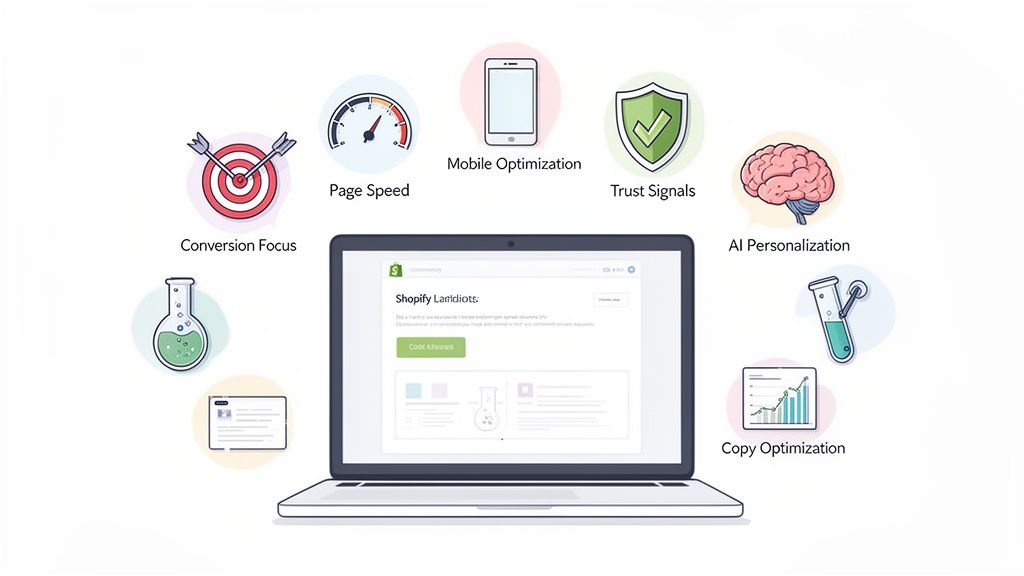

Choosing the Right Shopify A/B Testing Tools

Picking the right tool for A/B testing on your Shopify store can feel like a huge task. The App Store is flooded with options, ranging from heavyweight enterprise platforms like VWO to simpler apps built specifically for merchants. The truth is, the "best" tool really comes down to your team's comfort with technology, your budget, and how deep you plan to go with your experiments.

If you're just dipping your toes in, prioritize a tool with a user-friendly visual editor and a rock-solid Shopify integration. You shouldn't have to fight with code just to test a new button color. The goal is to find an app that makes setting up tests, picking your goals, and reading the results straightforward, no data scientist required.

Key Factors for Your Decision

When you start comparing options, it's easy to get fixated on the price. But the real cost of any tool is the time and energy it takes to actually use it. A cheap tool that’s a nightmare to manage will cost you far more in lost time and frustration.

Instead, zero in on these make-or-break factors:

- Ease of Use: Is it intuitive? Can your marketing team launch a test without needing to pull in a developer? For most Shopify store owners, a drag-and-drop visual editor is non-negotiable.

- Shopify Integration: How well does it play with Shopify? The best tools will automatically pull in your product info, revenue data, and other critical metrics. This saves you from tedious manual setup and ensures your tracking is spot-on.

- Key Features: Look beyond basic A/B tests. Does the tool offer more advanced options like split URL testing (great for entirely new page layouts) or personalization features that let you target specific customer groups?

- Pricing Structure: Pay close attention to how they charge. Is it a predictable flat monthly fee, or does the cost creep up as your traffic grows? Make sure the pricing model makes sense for your store's growth plans.

For a deeper dive into specific platforms, our guide on conversion optimization tools is a great resource for comparing features side-by-side.

Comparison of Popular Shopify A/B Testing Tools

To give you a head start, here’s a quick comparison of some of the most popular A/B testing apps for Shopify. This table breaks down their key features, pricing models, and overall ease of use to help you find the best fit for your store's needs.

Ultimately, the right choice depends on your specific goals. If you just need to test headlines, a simple tool will do. But if you plan on complex, multi-page experiments, you'll need a more powerful platform.

Don't Let Your Tool Slow You Down

A huge, often overlooked, consideration is how an A/B testing tool will affect your site's performance. Nearly all of these tools work by adding a snippet of JavaScript to your store. If that snippet is poorly implemented, it can add milliseconds, or even seconds, to your page load times. This is a big deal, because a sluggish site can ruin the very user experience you're trying to improve.

Your top priority here should be finding a tool that loads its script asynchronously. This is a fancy way of saying the testing script loads in the background and doesn’t stop the rest of your page from appearing. It's the key to minimizing any performance hit your visitors might feel.

Before you commit, dig into reviews and see what people are saying about site speed. A well-built platform will be engineered to have a minimal impact. Remember, the whole point of A/B testing on Shopify is to boost conversions, not send potential customers running because of a slow-loading page.

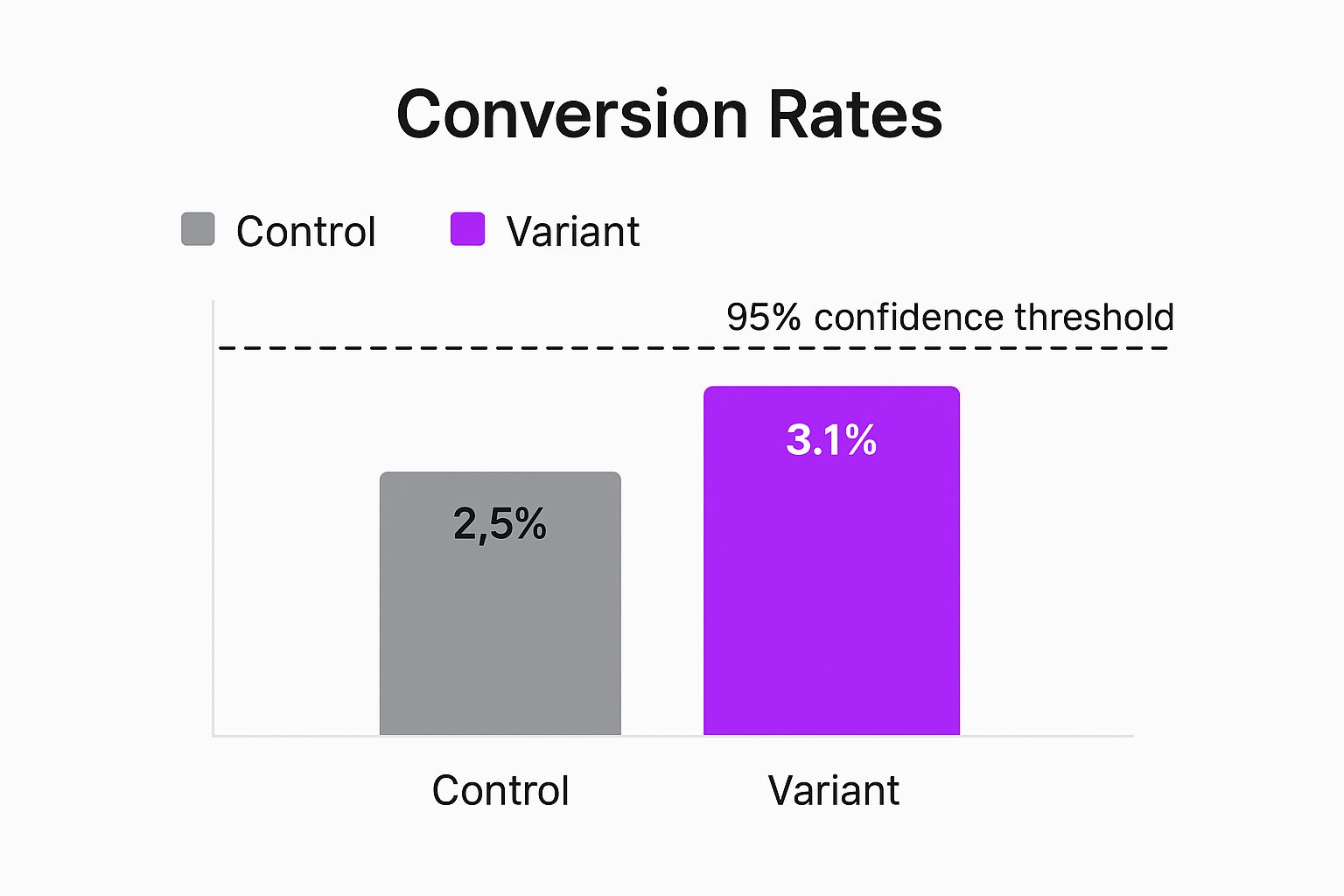

The chart below shows just how powerful a simple test can be. Here, a new variation of a page element clearly outperforms the original once the test has gathered enough data to be confident in the result.

You can see the variant hit a 3.1% conversion rate, a major lift from the control's 2.5%. This result validated the change with 95% statistical confidence, making it a clear winner.
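To put a number on "major lift," you can express the gap as a relative improvement over the control. This is just arithmetic on the two rates quoted above, shown as a small Python helper whose name is arbitrary.

```python
def relative_lift(control_rate: float, variant_rate: float) -> float:
    """Relative improvement of the variant over the control, as a fraction."""
    return (variant_rate - control_rate) / control_rate


lift = relative_lift(0.025, 0.031)  # control 2.5%, variant 3.1%
print(f"{lift:.0%}")  # prints "24%"
```

So a 0.6 percentage point gain is a 24% relative lift. Keep the two apart when you report results, because they sound very different.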

Running the Test and Making Sense of the Data

You've got a solid hypothesis and a testing tool ready to go. Now for the fun part: setting up the experiment.

Inside your chosen app, you’ll define your "control" (your current page) and your "variant" (the new version you're testing). Even with a simple visual editor, you want to be meticulous here. Double-check that every change is exactly as you planned.

Next, you need to tell the tool what success looks like. This isn’t just a fuzzy goal; it’s a specific, measurable action. For a product page test, the primary goal is almost always a completed purchase. You can also keep an eye on secondary metrics, like "add to carts" or "reached checkout," which can offer a more complete picture of user behavior.

Finally, you’ll decide how to split your traffic. For a standard A/B test, a 50/50 split is the way to go. This sends half your visitors to the original page and the other half to your new version, giving you the cleanest comparison.
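Your testing app handles the split for you, but it helps to know roughly what happens under the hood. The sketch below shows one common approach: hashing a visitor ID so the same person always lands in the same group. The function name, the ID format, and the experiment key are all hypothetical, and real tools may assign visitors differently.

```python
import hashlib


def assign_variant(visitor_id: str, experiment: str, variant_share: float = 0.5) -> str:
    """Deterministically bucket a visitor into 'control' or 'variant'.

    Hashing the experiment name together with the visitor ID keeps each
    visitor in the same group on every visit, and keeps assignments
    independent from one experiment to the next.
    """
    digest = hashlib.sha256(f"{experiment}:{visitor_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) / 0x100000000  # value in [0, 1)
    return "variant" if bucket < variant_share else "control"


group = assign_variant("visitor-123", "cta-copy")
print(group)
```

Consistency is the point here. A visitor who sees "Buy It Now" on Monday and "Add to Cart" on Tuesday muddies the comparison.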

Let it Run: The Importance of Patience and Statistical Significance

Once you launch the test, the hardest part begins: leaving it alone. It's incredibly tempting to peek at the results after a day or two, see one version pulling ahead, and call it a winner. Resist this urge. It’s one of the most common and costly mistakes in A/B testing on Shopify.

Early results are almost always unreliable and can swing wildly. A test needs time to mature.

For the results to be trustworthy, you need to hit two key milestones:

- A Solid Sample Size: Each version of your page needs enough visitors to iron out any random quirks in user behavior.

- Statistical Significance: Your tool needs to reach a confidence level of 95% or higher. That means a difference this large would be unlikely to appear through random chance alone, so your change is the more plausible explanation.
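To see how those two milestones interact, here is a minimal Python sketch of a two-proportion z-test, one standard way to check significance. The visitor and conversion counts are invented for illustration, and your tool may rely on a different statistical method, such as a Bayesian one.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_p_value(control_conv, control_n, variant_conv, variant_n):
    """Two-sided p-value for the difference between two conversion rates."""
    p1 = control_conv / control_n
    p2 = variant_conv / variant_n
    pooled = (control_conv + variant_conv) / (control_n + variant_n)
    se = sqrt(pooled * (1 - pooled) * (1 / control_n + 1 / variant_n))
    if se == 0:
        return 1.0  # no conversions (or all conversions): nothing to compare
    z = (p2 - p1) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Same conversion rates (2.5% vs 3.1%), different sample sizes. Counts are hypothetical.
p_small = two_proportion_p_value(50, 2_000, 62, 2_000)
p_large = two_proportion_p_value(250, 10_000, 310, 10_000)

print(p_small < 0.05, p_large < 0.05)  # prints "False True"
```

The rates are identical in both cases, yet only the larger sample clears the 95% bar. That is why an exciting early lead can't be trusted on its own.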

I always recommend running tests for at least two full weeks. This captures the natural ebb and flow of traffic, including different shopping habits on weekdays versus weekends. Ending a test too early is how you end up implementing a "false positive": a change that looked like a win but actually does nothing for your business, or even hurts it.
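Here is a rough way to turn a sample size target into a planned run time. The sketch assumes traffic is split evenly, applies the two-week floor from above, and rounds up to whole weeks so every weekday is covered equally. The function name and example numbers are hypothetical.

```python
from math import ceil


def planned_duration_days(visitors_per_variant, daily_visitors, variants=2, min_days=14):
    """Days to run a test: enough for the sample size, at least min_days,
    rounded up to full weeks."""
    days_for_sample = ceil(visitors_per_variant * variants / daily_visitors)
    days = max(days_for_sample, min_days)
    return ceil(days / 7) * 7


# Hypothetical store: 1,500 visitors a day, 14,000 visitors needed per variant.
print(planned_duration_days(14_000, 1_500))  # prints 21
```

If the answer comes back as several months, that is a sign to test a bolder change or a busier page instead.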

Trust the math, not your gut. The whole point of A/B testing is to get away from making decisions based on hunches. Wait for your tool to tell you the results are statistically sound.

Calling a Winner and Learning from the Outcome

The moment your testing tool confirms a statistically significant result, it’s time to dig in. You’ll see a clear comparison of conversion rates for the control and the variant, the percentage lift, and that all-important confidence level. If your variant won with 95% confidence or more, pop the champagne! You’ve found a data-backed way to improve your store.

But what if the results are flat? Sometimes, a test comes back "inconclusive," meaning there was no meaningful difference between the two versions. This isn't a failure—it's a finding.

An inconclusive result tells you something powerful: your change didn't move the needle. This is valuable information that prevents you from pushing a useless update and helps you come up with a better idea for your next test.

Whether you get a clear win, a loss, or a draw, every test is a lesson. A win puts money in your pocket. A loss shows you what your customers don't care about, steering your next experiment in a more promising direction.

Real-World Shopify A/B Testing Examples

Theory is great, but let's be honest—seeing how other stores actually used A/B testing to make more money is what really drives the point home. These examples aren't just hypotheticals; they show how a simple, data-backed idea can lead to a serious bump in conversions and revenue. Think of them as a practical blueprint for the kind of impact you can have.

A fantastic place to start testing is your product description. This is your sales pitch, but not everyone wants to read it the same way. Some shoppers are engineers at heart—they want every last technical spec. Others just want to know, "What's in it for me?" and they want to know it fast.

This exact challenge led to a huge win for Oransi, a store that sells high-end air purifiers. They tested a clever product description that showed the nitty-gritty technical details to visitors who craved them, while serving up a quick, punchy list of benefits to everyone else. The result? A massive 33.17% jump in their conversion rate in just four weeks.

Optimizing the Path to Purchase

Another goldmine for testing is the journey from cart to checkout. The smallest bit of friction here can send a potential customer running for the hills. Things like simplifying the layout, making shipping info crystal clear, or adding a few trust badges can work wonders.

Premium grooming brand Live Bearded nailed this. They ran an A/B/C test on their cart page, trying out three totally different layouts to see which one convinced more guys to hit that "Checkout" button.

A well-structured test in a critical part of the funnel, like the cart page, can produce outsized results. Small improvements at this stage have a direct and immediate impact on your bottom line.

By putting a few variations head-to-head, Live Bearded found a clear winner that boosted their checkout completion rate by an incredible 40%. You can dig into the specifics of how these brands ran their experiments and find more inspiration from these Shopify A/B testing examples on gempages.net. It just goes to show that if you pay attention to how your customers behave and test solutions, the payoff can be huge.

From Homepage Banners to Product Page Images

The truth is, opportunities for impactful tests are all over your Shopify store. Just think about the first impression your homepage makes or what your product images are really communicating.

Here are a few common testing ideas to get your gears turning:

- Homepage Hero Banner: Does a lifestyle shot of someone happily using your product beat a clean, studio shot of just the product? What about the copy? Does "Free Shipping" grab more attention than "20% Off"?

- Product Page Imagery: If you sell apparel, what happens if you add a short video of a model wearing the outfit? Or maybe just reordering the images to show the most popular color first could lift your add-to-cart rate.

- Navigation and Menus: Is your main menu a little cluttered? Testing a simplified navigation against your current, more detailed one can tell you a lot about how people are trying to find things on your site.

Every one of these tests starts with a simple question about what your customers do. By changing just one thing at a time and measuring what happens, you stop guessing and start building a real growth strategy. The trick is to keep your tests focused, form a clear hypothesis, and then let the data tell you what to do next.

Common Questions About Shopify A/B Testing

Even with a solid plan, it's natural to have questions when you first start A/B testing on your Shopify store. Getting good answers to these common sticking points is the key to building a testing program you can actually trust. Let's walk through some of the questions I hear most often from merchants.

The idea here is to get past the quick, generic answers and into the "why" behind them. Understanding the reasoning will help you sidestep common pitfalls and make smarter calls as you build out your store’s testing strategy.

How Much Traffic Do I Really Need?

This is the big one, and for good reason. While there's no single magic number, a solid benchmark to aim for is at least 1,000 conversions per variation, per month. Why that much? The more conversions each version collects, the easier it is to tell a real difference apart from random noise, which is what statistical significance is all about.

If you're getting fewer than 10,000 unique monthly visitors, getting a clear winner on smaller tests can be tough. In that case, your energy is often better spent on qualitative feedback. Think customer surveys, session recordings, and user interviews. Focus on implementing CRO best practices based on that feedback until your traffic picks up.
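To see where your own store stands against that benchmark, a little arithmetic goes a long way. The Python sketch below assumes an even traffic split and borrows the 3% conversion rate from the earlier example. Both function names are made up for illustration.

```python
from math import ceil


def conversions_per_variation(monthly_visitors, conversion_rate, variations=2):
    """Expected monthly conversions for each version, with traffic split evenly."""
    return monthly_visitors * conversion_rate / variations


def visitors_for_benchmark(target_conversions, conversion_rate, variations=2):
    """Monthly visitors needed for every version to reach the target."""
    return ceil(target_conversions * variations / conversion_rate)


print(conversions_per_variation(10_000, 0.03))  # about 150 per variation
print(visitors_for_benchmark(1_000, 0.03))      # prints 66667
```

At a 3% conversion rate, 10,000 monthly visitors yields only about 150 conversions per version, which is why smaller stores often get more from qualitative research first.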

An inconclusive test isn't a failure—it's a learning opportunity. It tells you that the change wasn't impactful enough to alter user behavior, which is valuable information for refining your next hypothesis.

What Are the Best Elements to Test on a Product Page?

Product pages are absolute gold mines for testing. They're one of the final steps before a customer decides to buy, so even small tweaks here can have a massive impact on your conversion rates.

If you want the most bang for your buck, start by focusing on these high-impact elements:

- Call-to-Action (CTA): This is a classic. Try changing the button text ("Add to Cart" vs. "Buy Now"), experimenting with a bold new color, or shifting its placement.

- Product Imagery: See what resonates more with your audience—lifestyle photos showing the product in use, or clean, crisp studio shots on a white background? You could also test adding a short product video.

- Product Descriptions: Is your audience a fan of scannable bullet points, or do they prefer a more descriptive, storytelling paragraph? You might be surprised by what works.

- Social Proof: Don't just have customer reviews—test where you put them. See if making trust badges (like payment logos or security seals) more prominent gives customers that extra nudge of confidence.

Can A/B Testing Hurt My Store’s SEO?

I get this question a lot, and it's a smart one to ask. The short answer is no, it won't hurt your SEO, as long as you follow the rules. In fact, Google publishes its own guidelines for running A/B tests without affecting how your pages appear in search.

To keep everything above board, just stick to a few best practices. First, use a reputable testing tool that handles canonical tags correctly. This tag points Google to the original version of the page, so you don't get dinged for duplicate content. Second, never engage in "cloaking," which is showing one version of a page to Google's bots and a different one to your human visitors. Third, if a test redirects visitors to a separate URL, use a temporary (302) redirect rather than a permanent (301) one, so Google keeps the original page in its index.

Finally, don't let your tests drag on indefinitely. As soon as you have a clear winner with enough data to back it up, implement the change for good and turn off the experiment. As long as you follow these simple guidelines, your testing and SEO efforts can work together perfectly.

At ECORN, we turn data-driven insights into real revenue growth for Shopify stores. If you're ready to stop guessing and start building a high-impact optimization program, explore our CRO services.